Designing an S3 object storage system

2023-09-24T15:00:12.421ZDesigning an S3 object storage system

Simple Storage Service is the bedrock of cloud object storage. First launched by Amazon in 2006, S3 is a very popular option today for storing vast amounts of data, since it is cost-effective, simple to use, and highly durable. S3 offers what looks like, for most practical purposes, infinite space and bandwidth. So popular is Amazon's S3 that its conventions are known as the "S3 protocol", now supported by many other providers like Cloudflare, Backblaze, and Google Cloud Storage.

As such, S3 is a rewarding system to learn from. How does it achieve all this at scale? What are its core mechanisms? Where are the tradeoffs? How does it work? Let's explore what it takes to design an S3 object storage system.

Framing the design

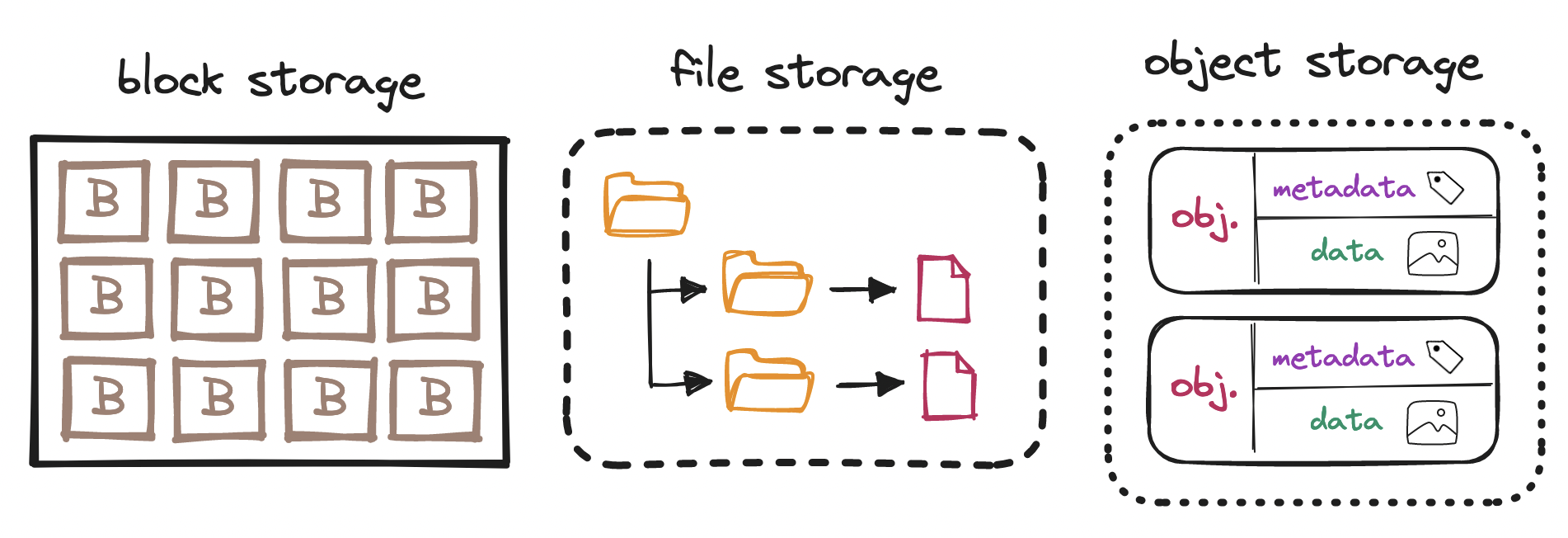

What do we mean by "object storage"? Storage systems can be classified as:

-

Block storage: Blocks are the smallest group of data that we can manage. Blocks are typically 512 bytes to 4 KiB in size, accessible by their memory address. Operating systems use block storage to manage data in file systems; databases manage blocks directly to optimize performance.

-

File storage: Built on top of block storage, file storage is a more familiar abstraction to most users. File storage enables us to manage data in files and directories laid out in a hierarchical structure, which abstracts away the complexity of direct block management.

-

Object storage: By contrast, object storage trades performance for scale at lower cost. Where block and file storage quickly read and write data by relying on a specific format, object storage more slowly reads and writes data and metadata items (together, "objects") in a plain flat structure.

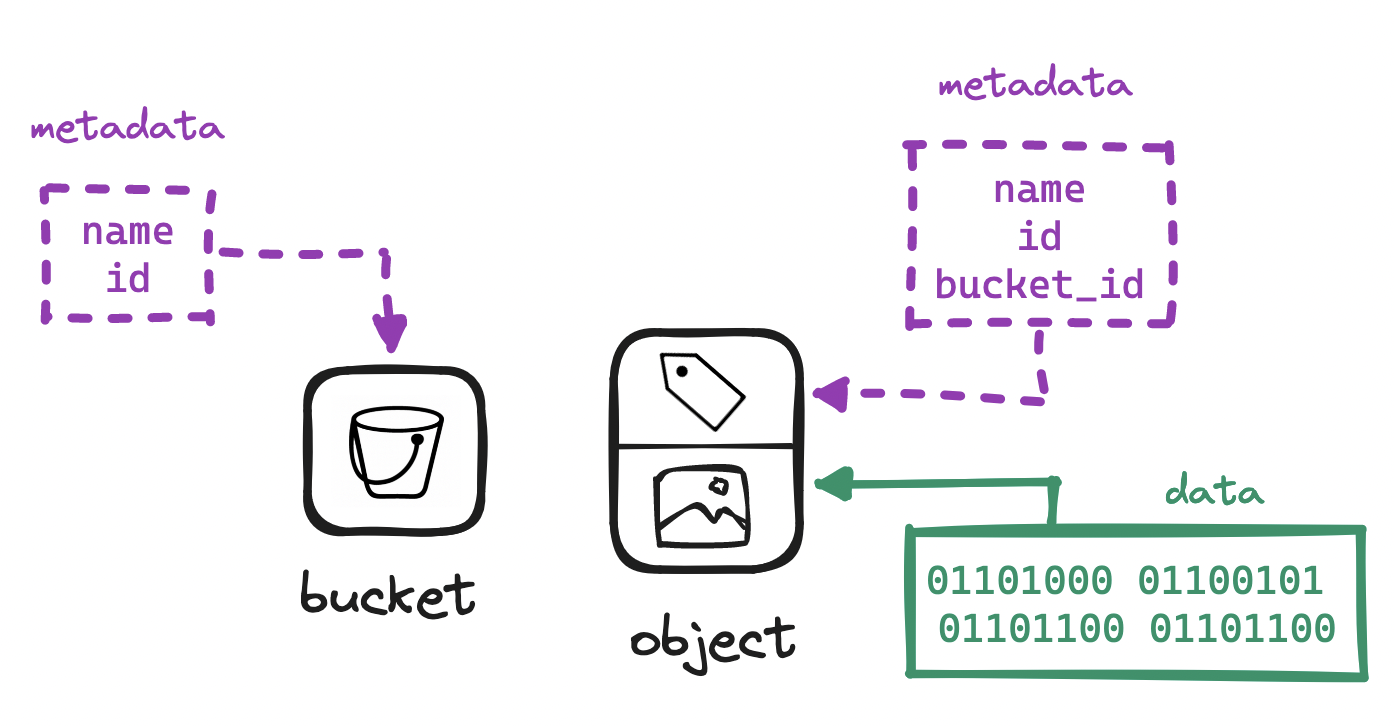

An object is an immutable piece of...

- data, a sequence of bytes, e.g. the blob of bytes of binary data that makes up an image, and

- its separate but associated metadata, key-value pairs describing the actual data, e.g. the name of the image.

Mind the immutability: we can delete or overwrite an object, but we cannot patch it. Objects are assigned URIs and most often accessed via a network request.

URI: https://s3.example.com/album/picture.png

In the example, picture.png is the object and album is a bucket. What is a bucket? Organizing vast amounts of data in a strictly flat structure can be a challenge, so S3 offers buckets as top-level containers to place objects into. Notice that buckets are not themselves data, but rather metadata common to a group of objects.

Buckets cannot be nested inside other buckets, but we can achieve nesting by having the object name resemble a filepath, with / delimiters. The section of the object name up to the last delimiter is known as a "prefix", often used when querying. For example:

URI: https://s3.example.com/album/2023/september/picture.png

Bucket name: album

Object name: /2023/september/picture.png

Prefix: /2023/september/

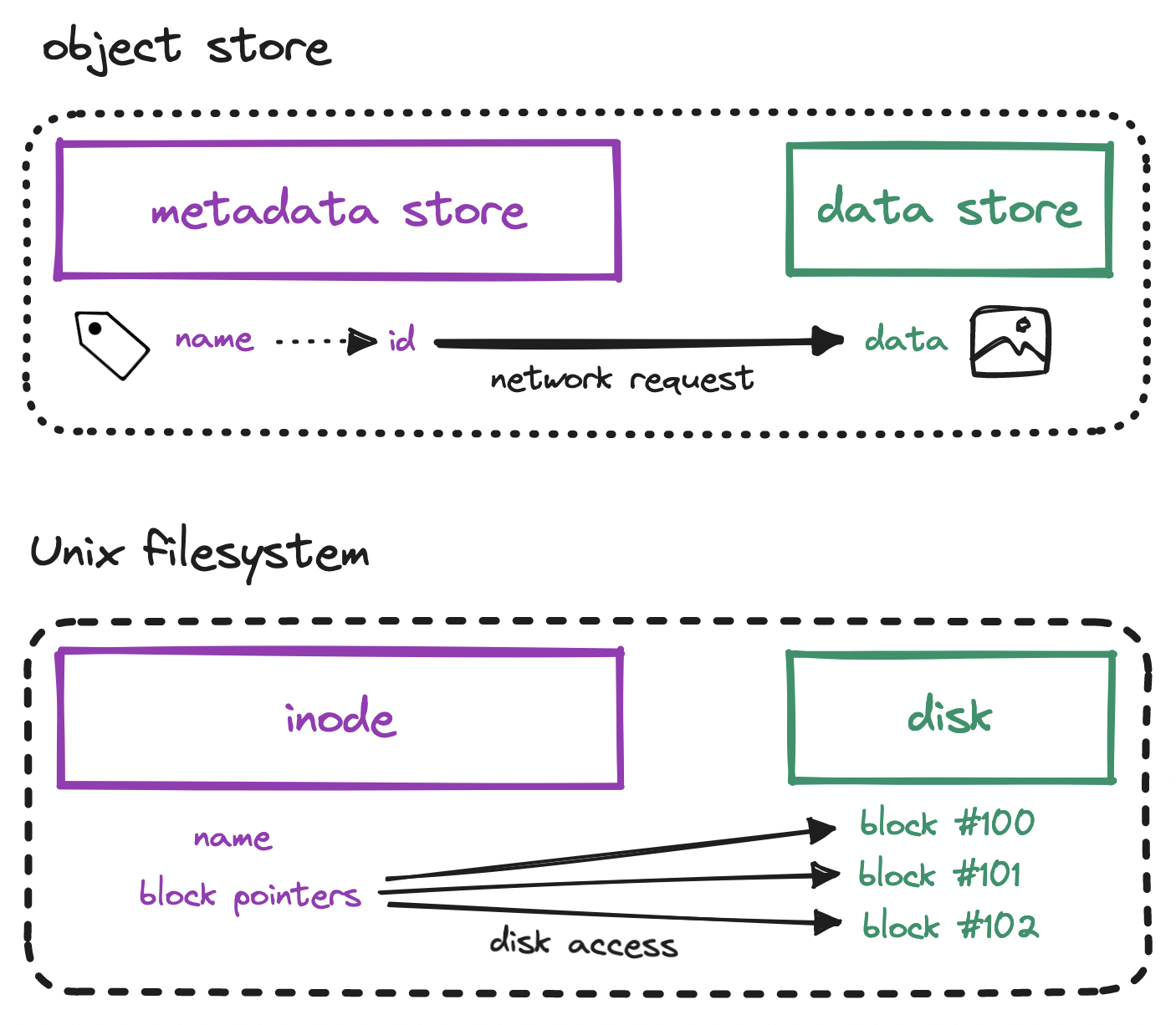

This layout where data and metadata are separate but associated is a callback to Unix filesystem design, where the inode (or index node) stores pointers to the blocks on disk that contain all the bits that make up the actual data.

High-level design

With this in mind, we can start to draft a high-level design for our S3 system:

- For reading and writing objects to disk, we need to set up a data service.

- For reading and writing metadata for those objects, a metadata service.

- For enabling users to interact with our system, an API service.

- For handling user auth, identity and access management (IAM).

That is, we need a central service that internally communicates with others that will...

- verify who the requesting user is ("authentication"),

- validate that their permissions allow the request ("authorization"),

- create a container ("bucket") to place content into,

- persist ("upload") a sequence of bytes and its metadata ("object"),

- retrieve ("download") an object given a unique identifier,

- return a response with the result to the requesting user.

We can group these six operations into three flows: bucket creation, object upload, and object download. Full-blown S3 systems support many more flows, but these three make up the core of S3 and allow us to explore how most of the system works, from top to bottom.

Let's begin by designing the object upload flow.

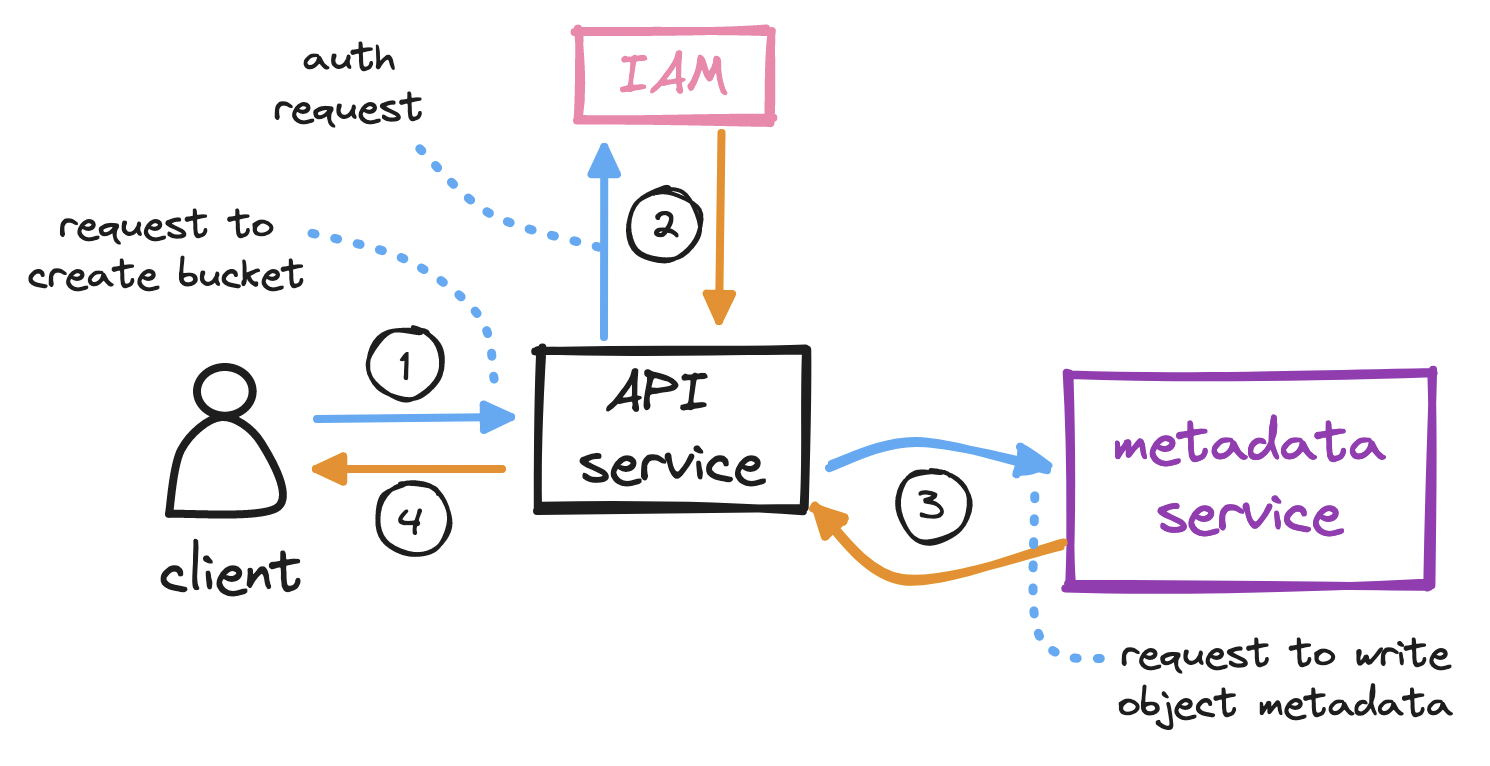

To create a bucket...

- The client sends an HTTP

PUTrequest to create a bucket. - The client's request reaches the API service, which calls identity and access management (IAM) for authentication and authorization.

- A bucket, as we mentioned, is merely metadata. On successful auth, the API service calls the metadata service to insert a row in a dedicated

bucketstable in the metadata database. - The API service returns a success message to the client.

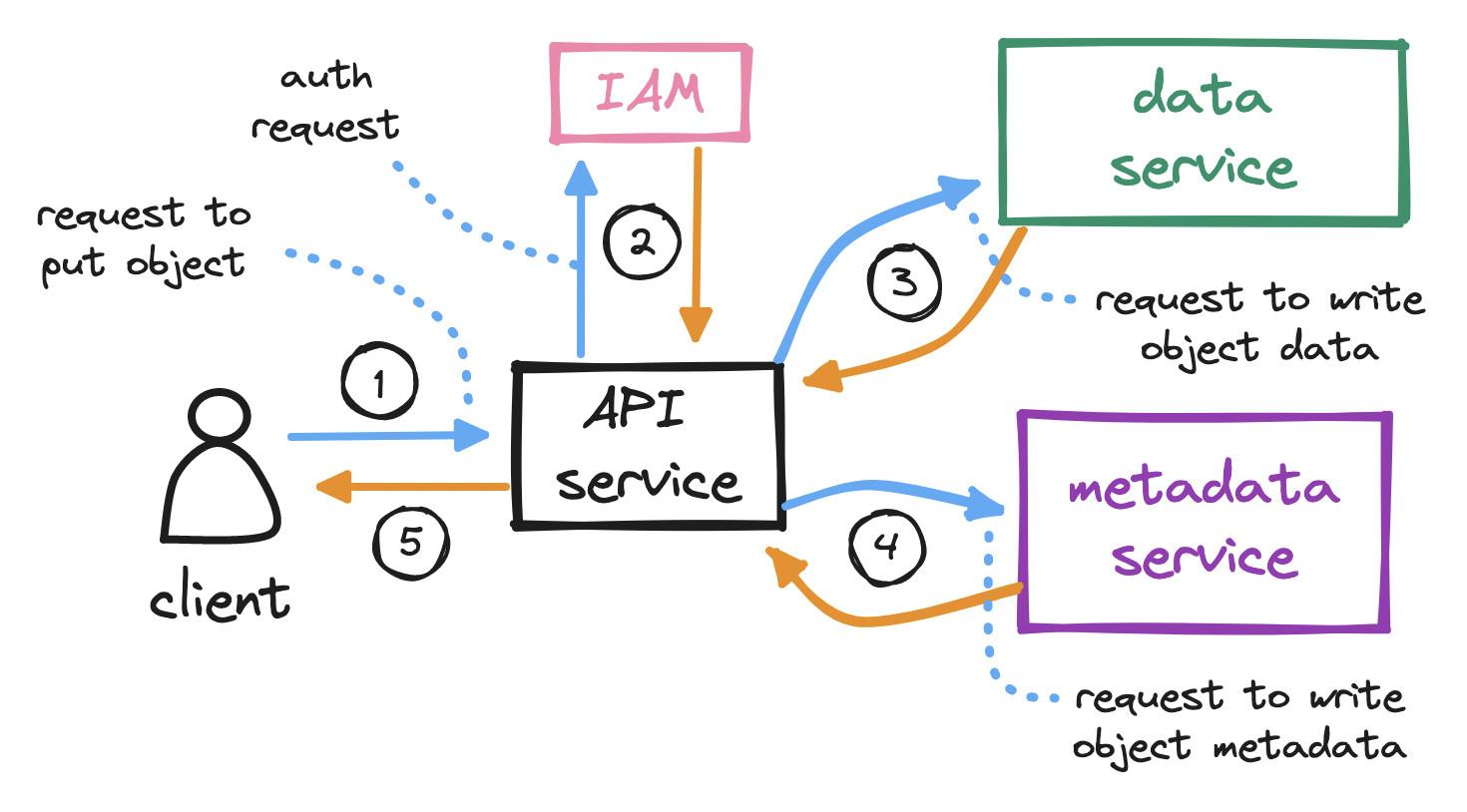

To upload an object...

- With the bucket created, the client sends an HTTP

PUTrequest to store an object in the bucket. - Again, the API service calls IAM to perform authentication and authorization. We must check if the user has write permission on the bucket.

- After auth, the API service forwards the client's payload to the data service, which persists the payload as an object and returns its

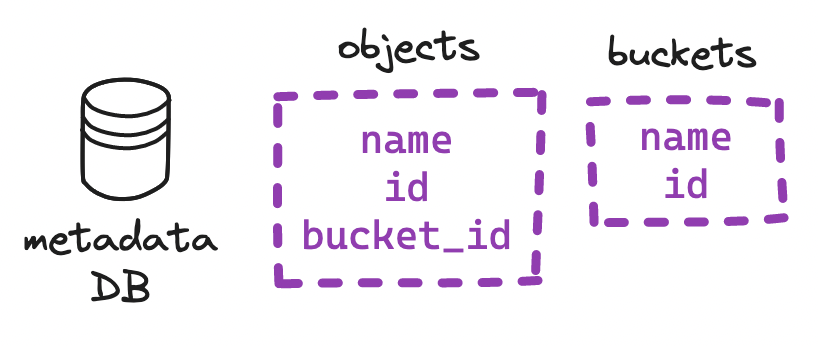

idto the API service. - The API service next calls the metadata service to insert a new row in a dedicated

objectstable in the metadata database. This row holds the objectid, itsname, and itsbucket_id, among other details. - The API service returns a success message to the client.

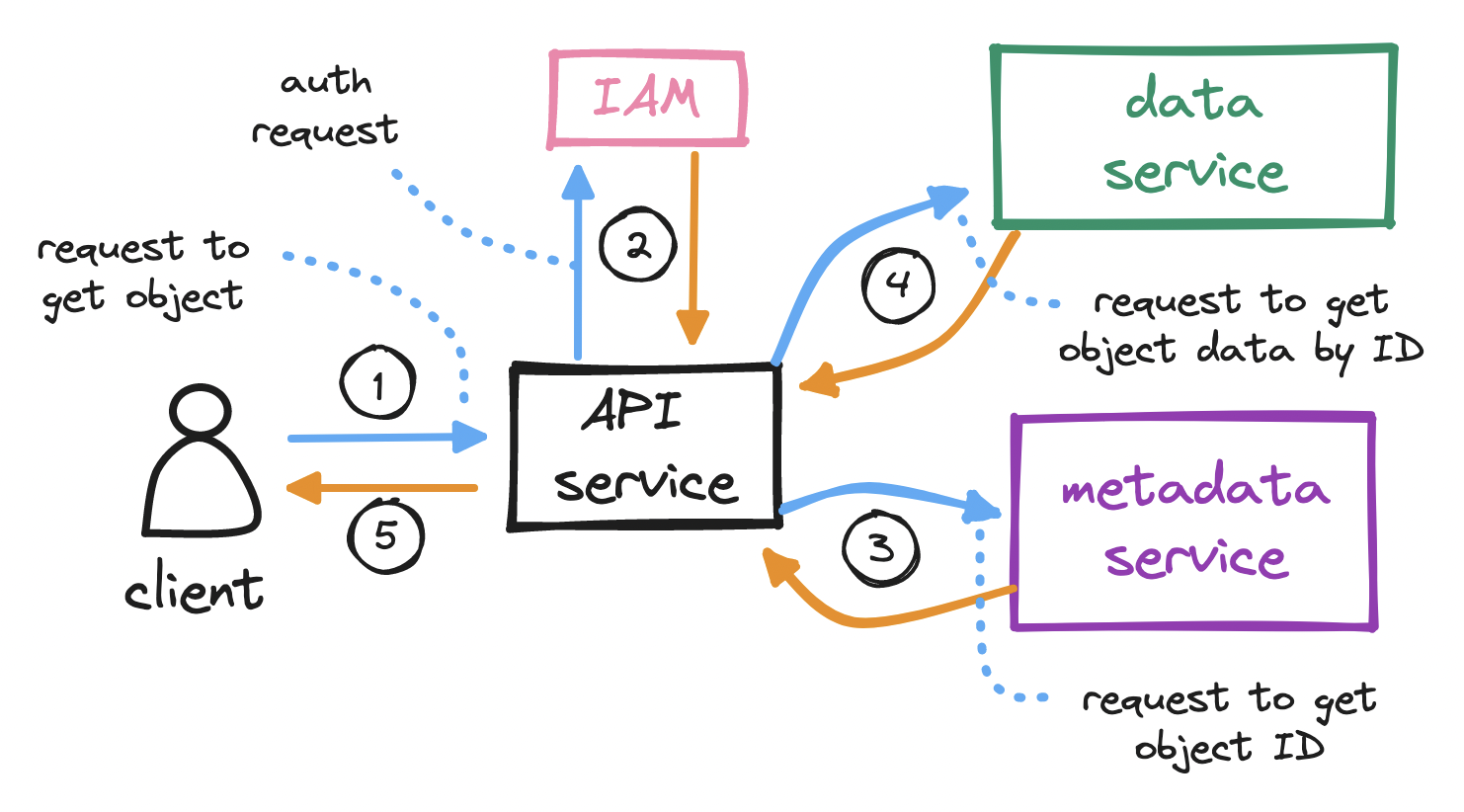

To download an object...

- With the bucket created and the object uploaded, the client sends an HTTP

GETrequest, specifying aname, to retrieve the object. - The API service calls IAM to perform authentication and authorization. We must check that the client has read access to the bucket.

- Since the client typically sends in the object

name(not itsid), the API service must first map the objectnameto itsidbefore it can retrieve the object. Hence, on successful auth, the API service calls the metadata service to query theobjectstable to locate the objectidfrom itsname. Note how, in the download flow, we visit the metadata service before the data service, as opposed to the upload flow. - Having found the object

id, the API service sends it to the data service, which responds with the object's data. - Finally, the API service returns the requested object to the client.

Low-level design

In our high-level design, two services are S3-specific and do most of the heavy lifting: the data service and the metadata service. Let's dig into their details, starting with the most important one.

Data service

The data service needs to be able to write and read a sequence of bytes to and from disk. In this context, a disk is often a hard disk drive (HDD) or solid-state drive (SSD); it can also be a network-attached storage (NAS) device, or even a virtual disk in a cloud environment.

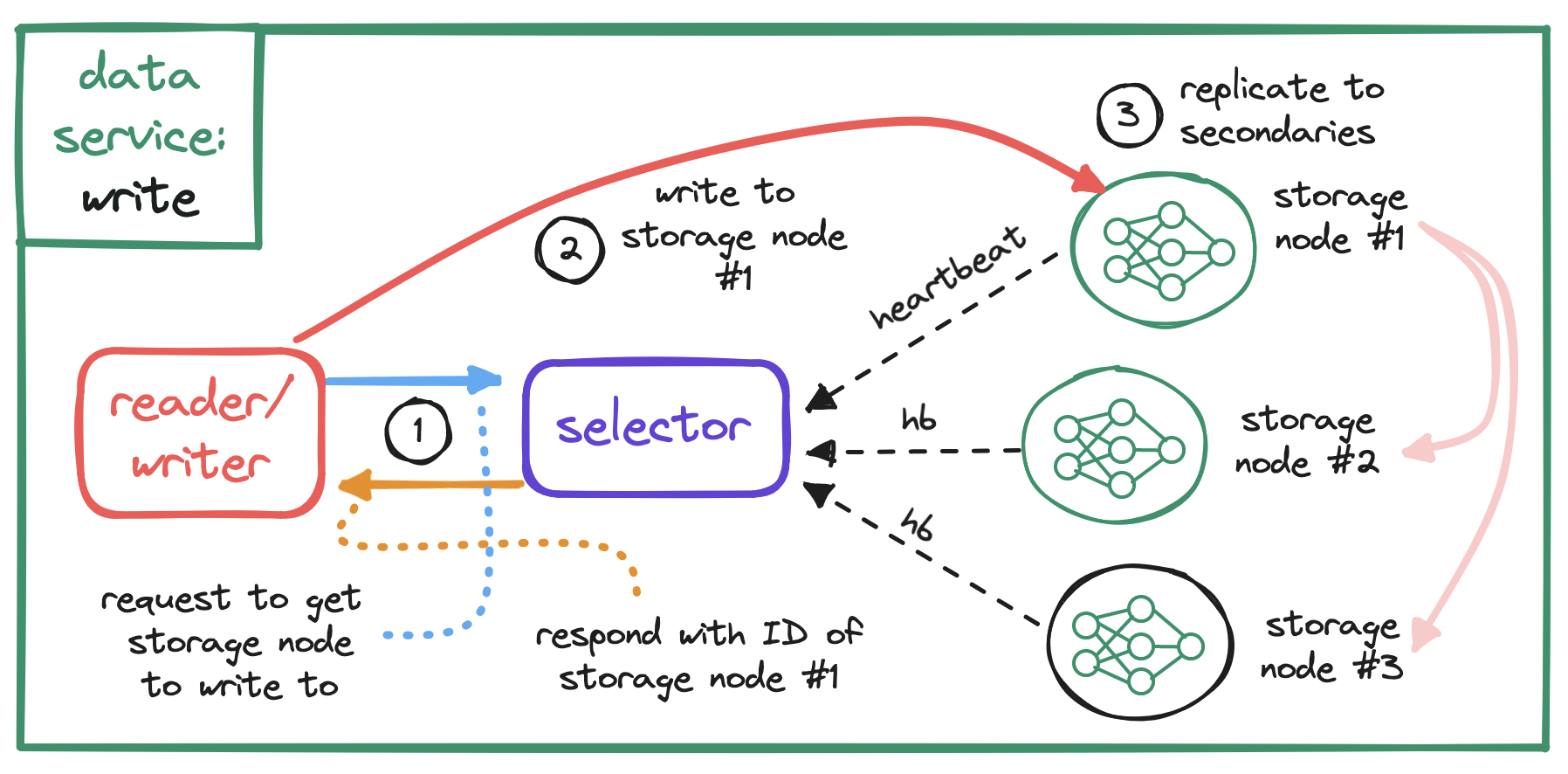

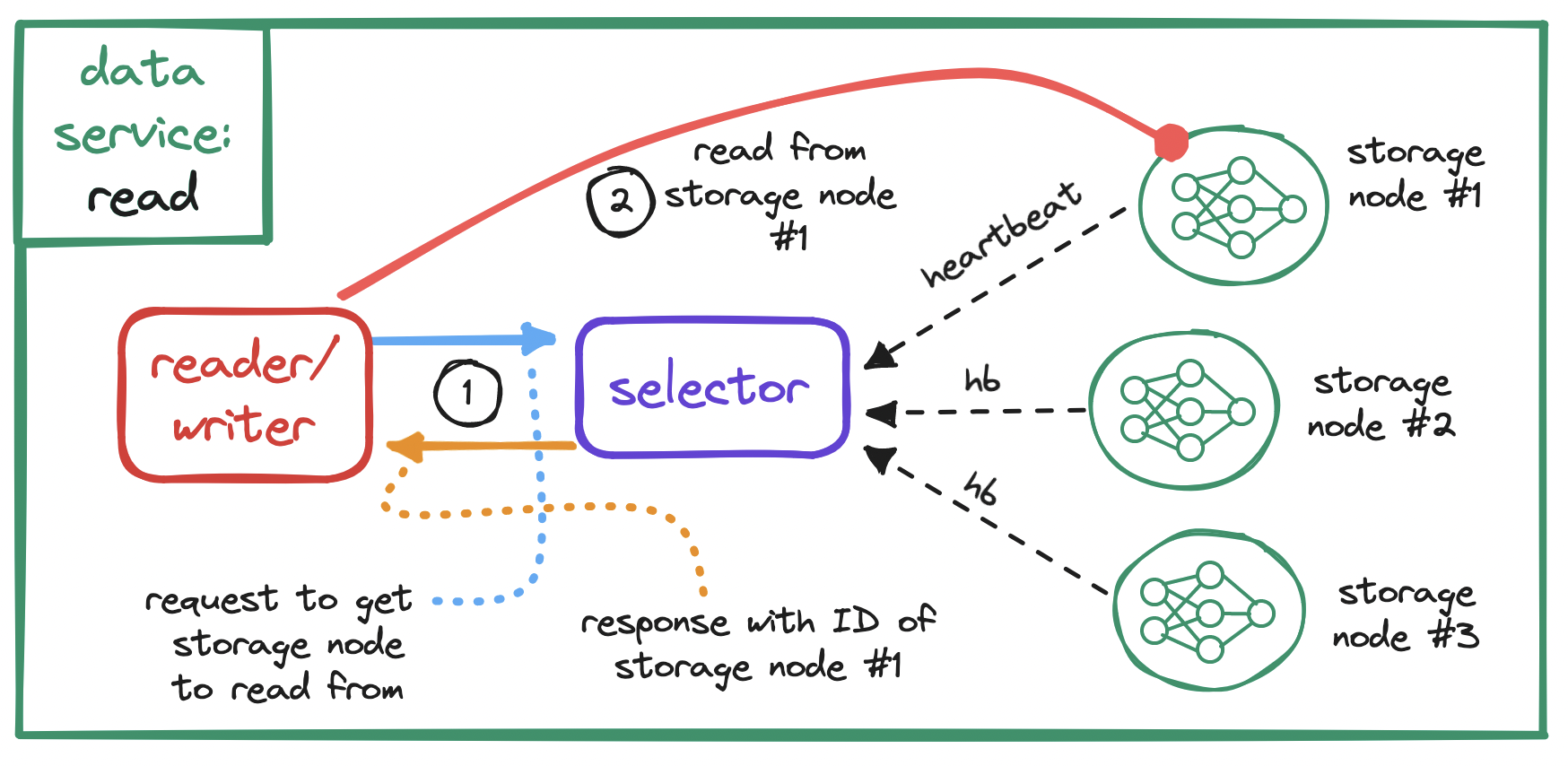

For our purposes, a storage node is a group of any such disks, i.e. a bundle of space to write to and read from, and our data service will be responsible for managing these nodes. For fault tolerance, the data service will also need to replicate data across multiple storage nodes, each sending back a heartbeat to allow the data service to tell apart healthy nodes from failed nodes.

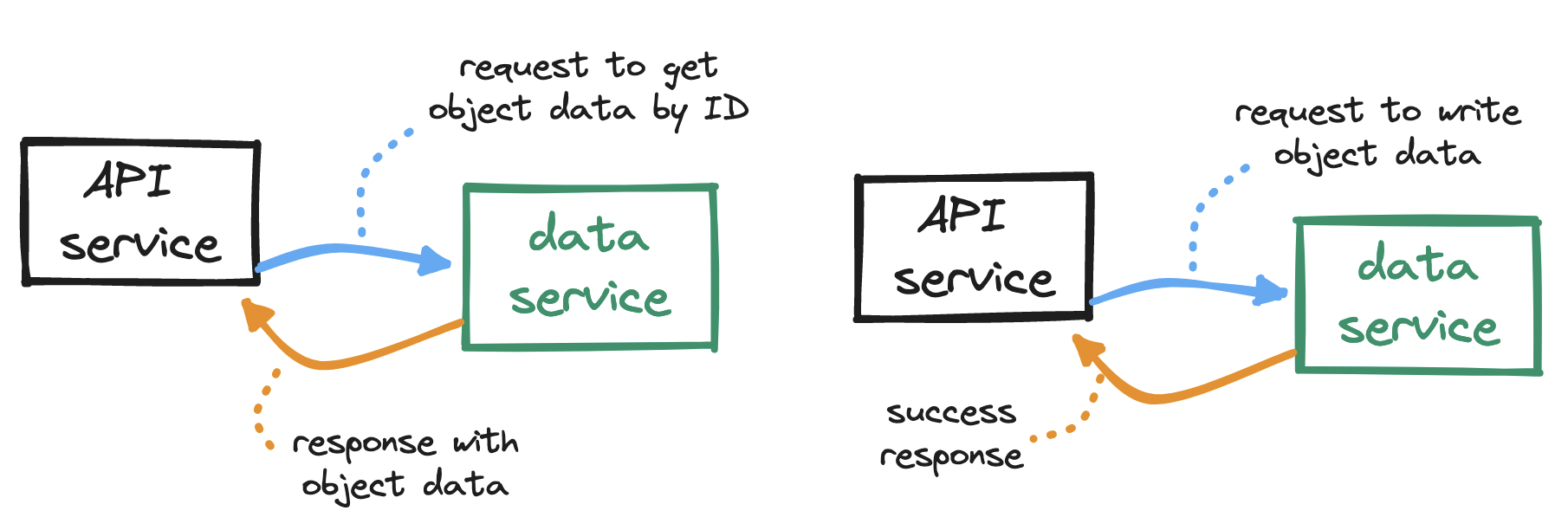

How do storage nodes fit into our design? Remember the interactions between the API service and the data service:

To serve requests from the API service, our data service will need to...

- monitor the health of all storage nodes,

- locate nodes to write to, distributing writes consistently,

- locate storage nodes to read from, distributing reads consistently,

- write objects to, and read objects from, storage nodes,

- replicate objects across storage nodes.

These operations can be grouped. For example, we can group the first three into a selector subservice, and the last two into a reader-writer subservice.

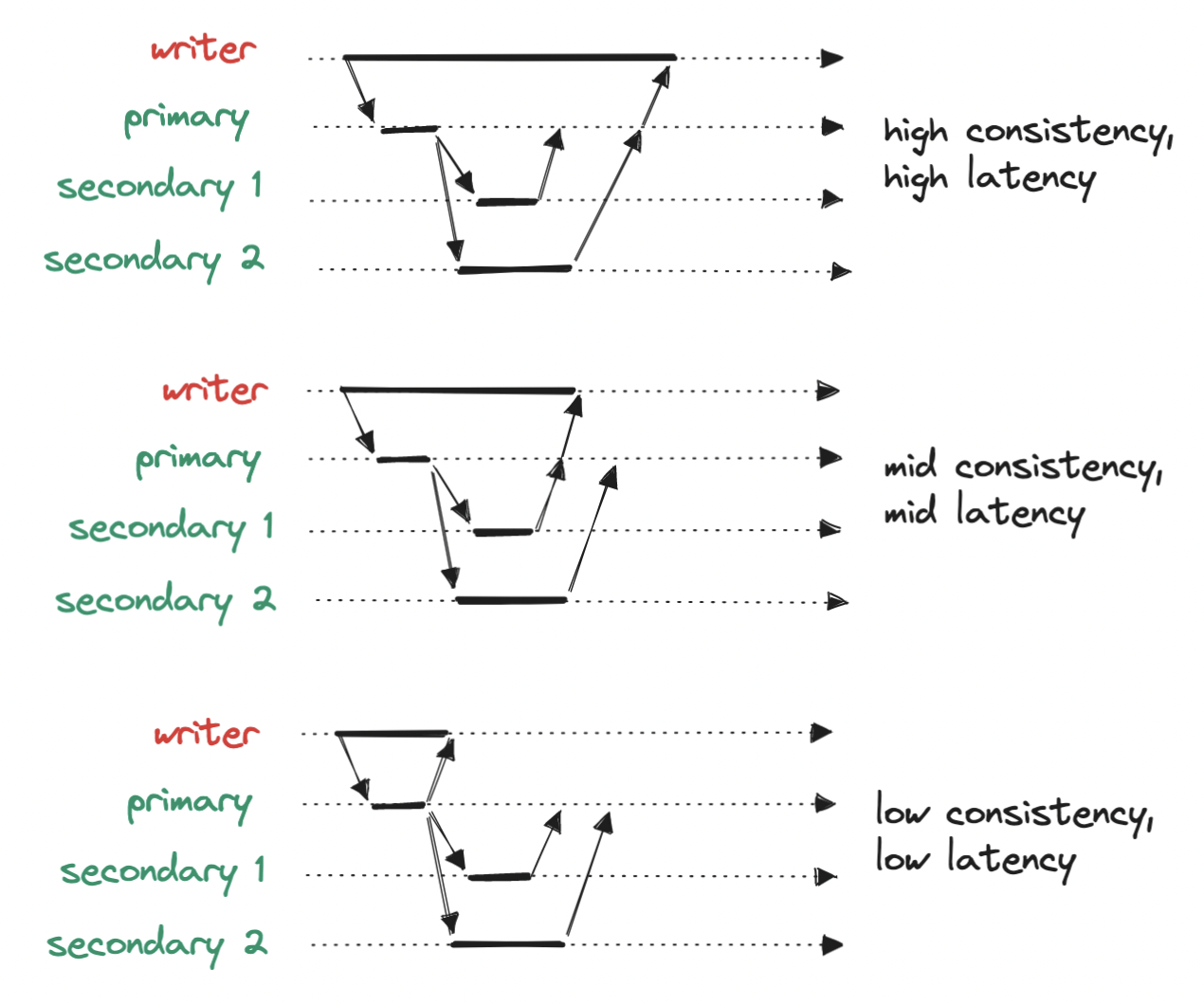

When it comes to replication, either for S3 or more generally, remember to keep in mind the tradeoff between consistency and latency. We may choose to write to a single node, respond immediately and replicate later, at the cost of data loss in case of replication failure; or we many choose to wait for partial or full replication before responding, at the cost of increased latency.

Let's keep drilling down. How exactly do we write to a storage node?

The naivest solution would be to write each object as a single file in the storage node. However, each block is typically 4 KiB in size, so any objects smaller than this will waste disk space. What we need is a more compact way to store objects, ideally similar to how blocks work, but for objects.

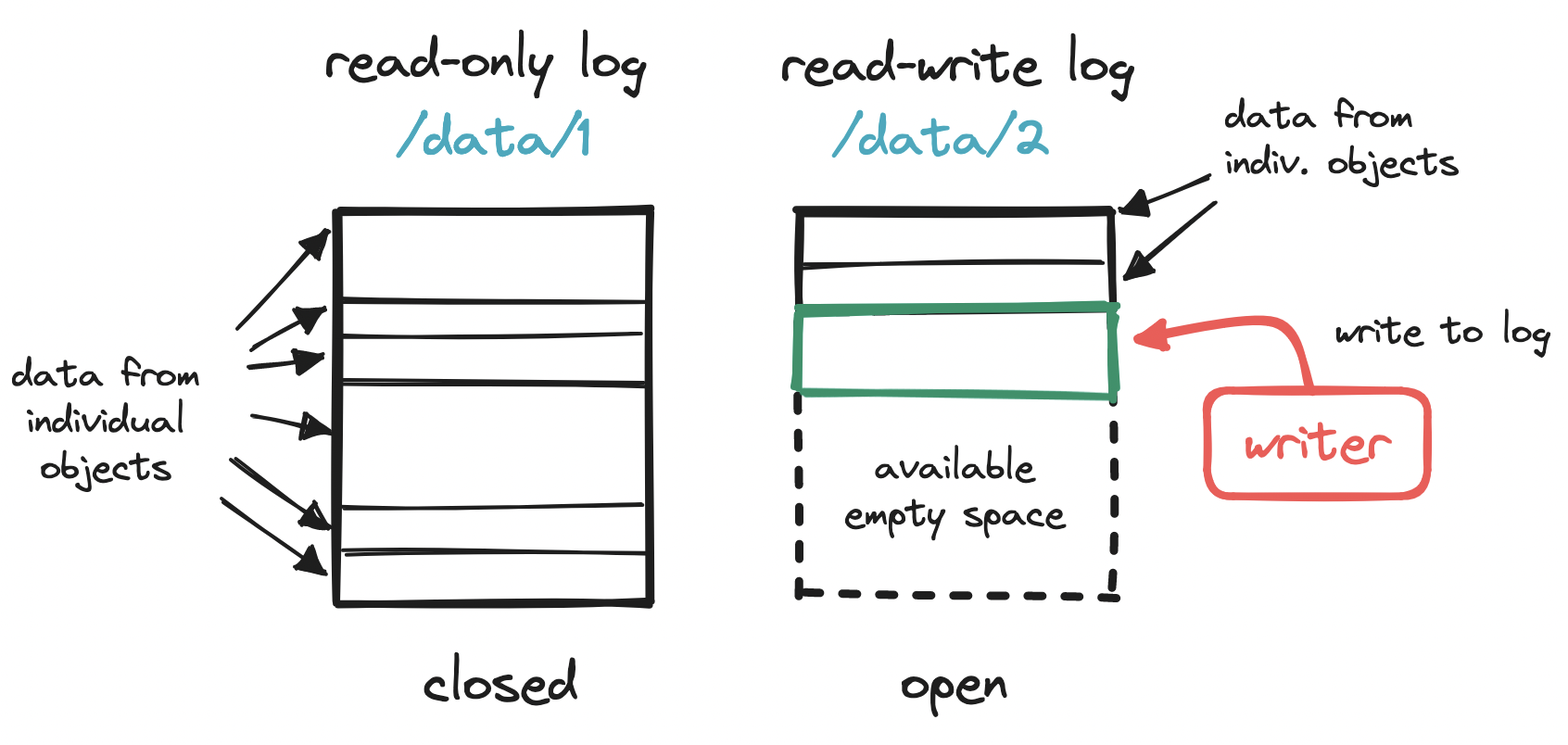

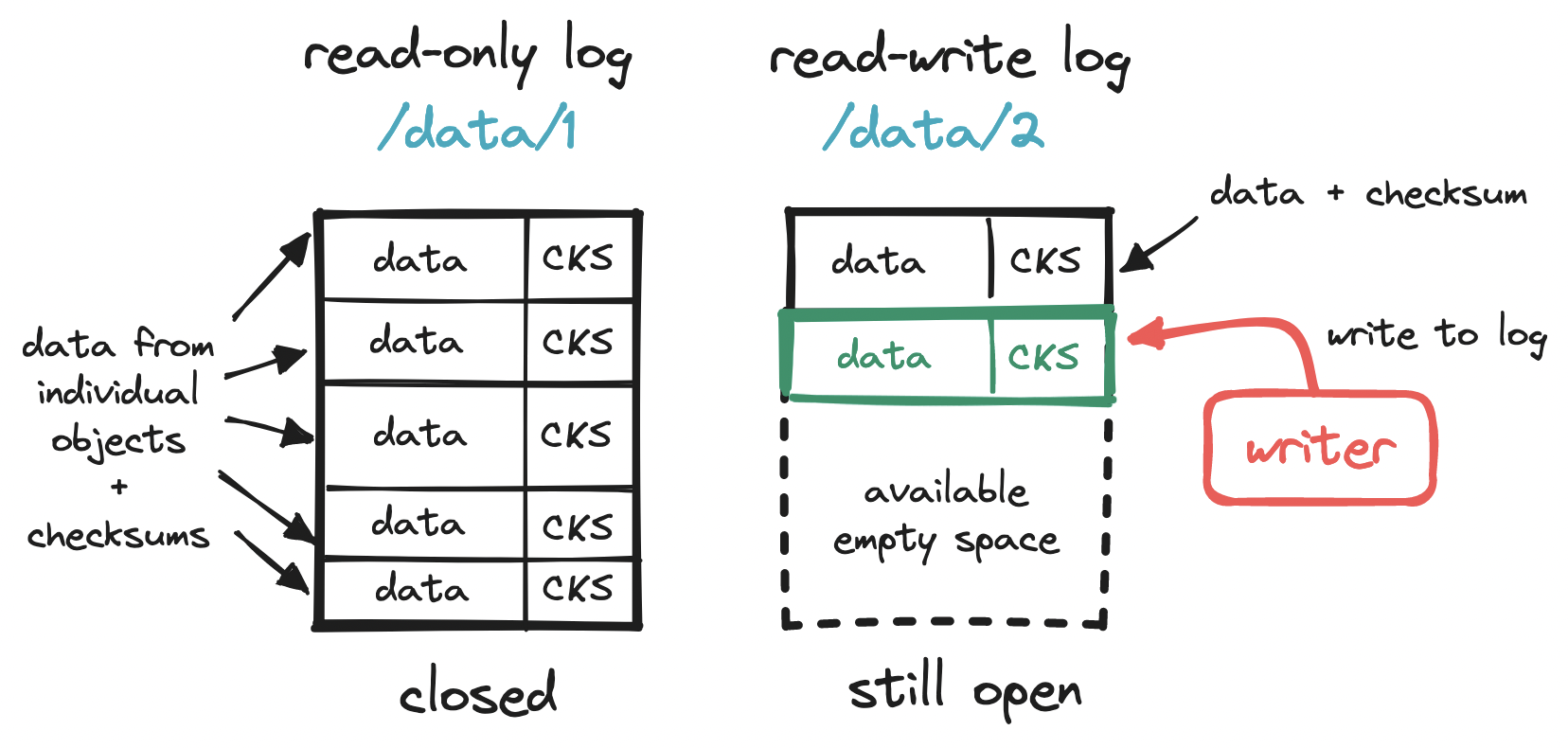

To make the most out of our disk, we can write multiple small objects into a larger file, commonly known as a write-ahead log (WAL). That is, we append each object to a running read-write log. When this log reaches a threshold (e.g. 2 GiB), the file is marked as read-only ("closed"), and a new read-write log is created to receive subsequent writes. This compact storage process is what accounts for S3 objects being immutable.

But how do we find an object across all these log files? Searching for an object with no indication of where it may have been written to ("sequential scan") is not scalable.

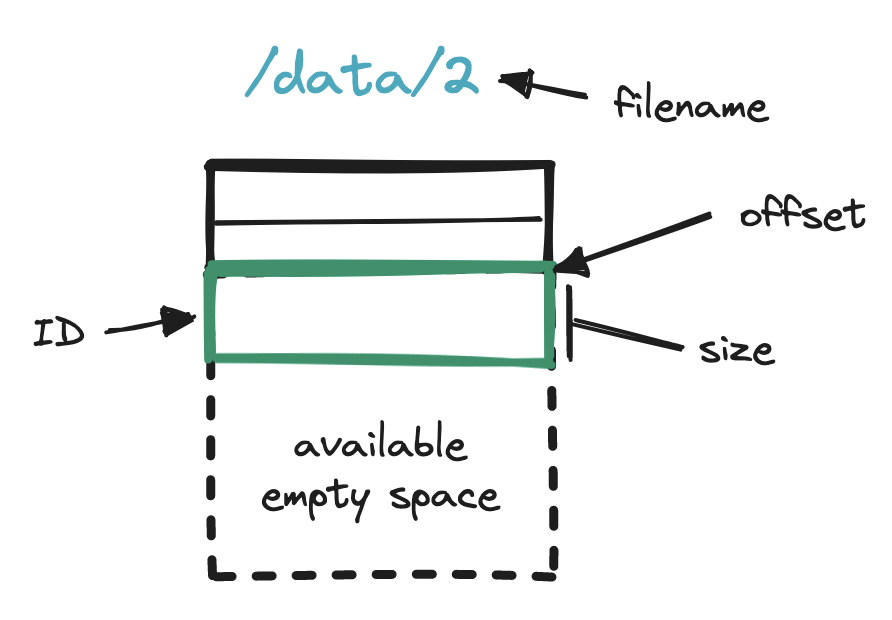

To enable fast reading, we can embed a small database in the storage node (e.g. sqlite) to hold location details. Immediately after appending an object to the running read-write file, we turn to this embedded DB and store the object id, log filename, how far away the object is from the start of the file or offset, and the object size. With these location details, we can later query the embedded DB and quickly find any object across all the log files in a storage node.

Metadata service

Our metadata service is simpler. This service will need to...

- store metadata for buckets, for the bucket creation flow,

- store metadata for objects, for the object upload flow,

- find an object's metadata given its name, for the object download flow,

Hence our two tables may look like this:

Typically, S3 systems cap the number of buckets allowed per user, so the size of our buckets table will remain bounded. If each user has set up 20 buckets and each row takes up 1 KiB, then one million users will require ~20 GiB. This can easily fit in a single database instance, but we may still want to consider provisioning read replicas, for redundancy and to prevent a bandwidth bottleneck.

The objects table, on the other hand, will grow unbounded. The number of rows, conceivably in the order of billions, will exceed the capacity of any single database instance. How can we partition ("shard") this vast number of rows across multiple database instances?

-

If we shard by

object.bucket_id, causing objects in the same bucket to end up in the same partition, we risk creating some partitions that will handle much more load than others. -

If we shard by

object.id, we can more evenly distribute the load. But remember: when we download an object, we call the metadata service and pass in bothobject.nameandobject.bucket_namein order to findobject.id, which we then use to locate the actual data. As a result, this sharding choice would make a major query in the object download flow less efficient.

Hence we need a composite key. Given that an object's identifier is a combination of its name and bucket_name, we can hash both values into a single sharding key to even out the load, while also supporting the object download flow.

Expanding our system

How would our design fare when expanding the system? Let's consider a few more common features of S3 systems and how we could support them.

Durability is a key feature of S3. To prevent object loss, we have implemented heartbeats and replication across storage nodes, but this only defends against total node failure. What about data corruption in otherwise healthy nodes?

We need a guarantee that the data we read is the same as the data we wrote. One solution is to generate a fixed-length fingerprint ("checksum") from each sequence of bytes we write, and to store that fingerprint alongside the sequence. Consider a fast hash function like MD5. Later, at the time of retrieval, immediately after receiving data, we generate a new checksum from the data we received, and compare the new checksum to the stored one. A mismatch here will indicate data corruption.

Another key feature of S3 is its vast storage space. To make the most out of our disk, we rely on a write-ahead log to store objects and a small embedded DB to locate them. With this setup, implementing object deletion is a matter of locating the object and marking it as deleted.

But a housekeeping issue remains. How do we handle garbage collection? Both deletion and data corruption will produce unused storage space, so we need a way to reclaim space that is no longer used. One solution is to periodically run a compaction process, which would...

- find the valuable sections of a set of read-only log files,

- write those valuable sections into a new log file,

- update object location details in the embedded DB, and

- delete the old log files.

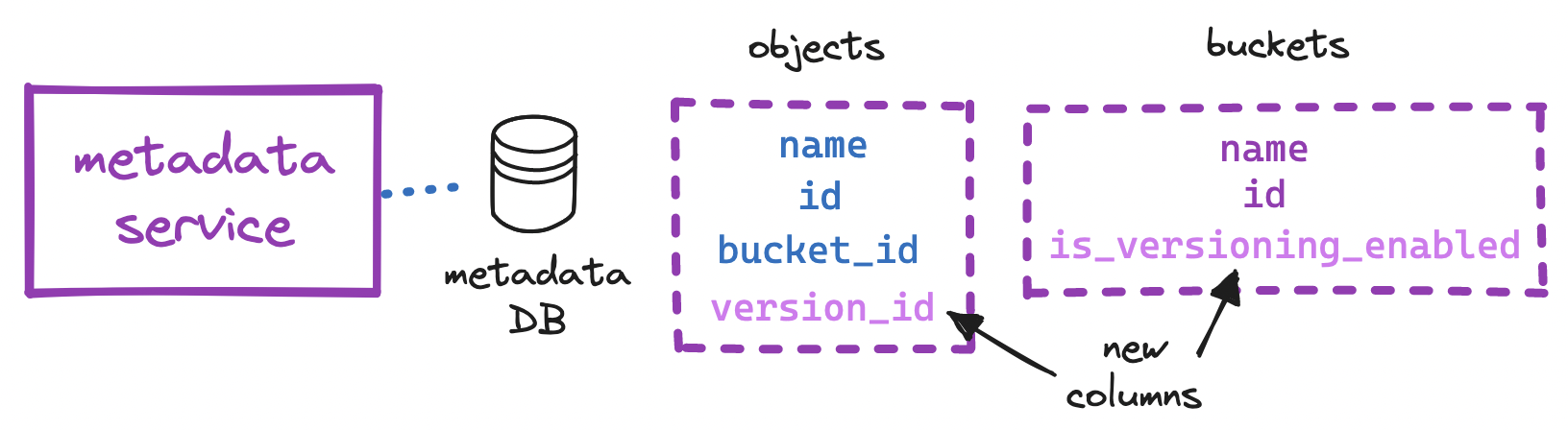

Similarly, versioning is a key feature of many S3 systems. With bucket versioning, we can store multiple versions of the same object in a bucket, and restore them - this prevents accidental deletes or overwrites. To some extent, versioning also lets us work around the immutability of the write-ahead log.

To implement versioning, we can begin by adding a is_versioning_enabled column to the buckets table and a version_id column to the objects table. At the time of insertion, instead of overwriting the existing row, we can append a new row with the same object.bucket_id and object.name as the existing row, but with a new object.id and an incremented object.version_id. With versioning enabled, deleting an object will mark the version with the highest object.version_id as deleted, and retrieving an object will look up its latest non-deleted version.

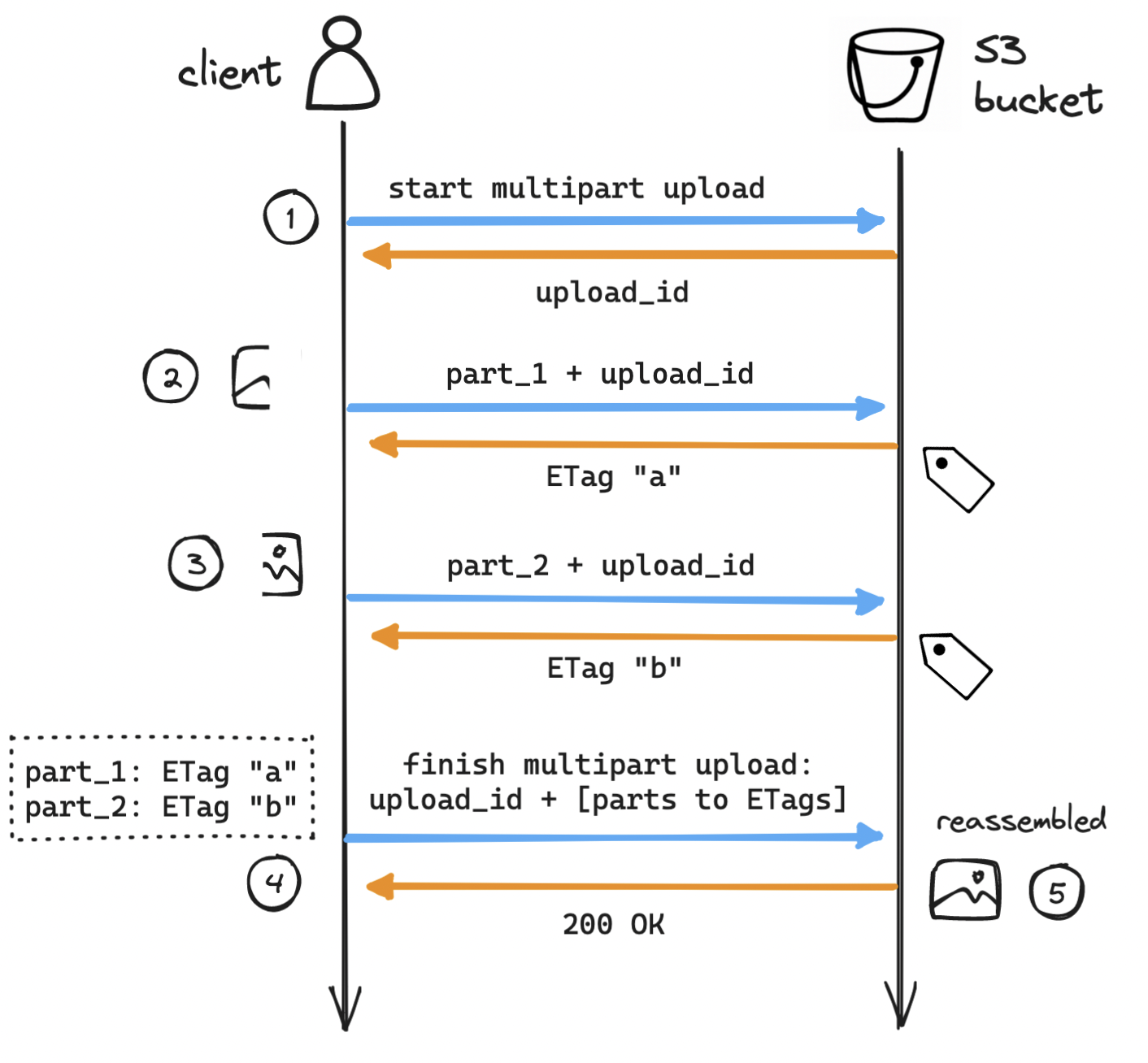

Multipart uploads are another common feature of S3 systems. With multipart uploads, we can upload objects in smaller parts, either sequentially or in parallel, and after all parts are uploaded, we reassemble the object from its parts. This is useful for large uploads (e.g. videos) that may take long and so are at risk of failing mid-upload. On upload failure, the client resumes from the last successfully uploaded part, instead of having to start over from the beginning. What could this look like?

- The client requests S3 to initiate a multipart upload for a large object and receives an

upload_id, a reference to the current upload session. - The client splits up the object into parts (in this example only two, often more) and uploads the first part labeled as

part_1, including theupload_id. On success, S3 returns an identifier (ETag, or entity tag) for that successfully uploaded part of the object. - The client repeats the previous step for the second part, labeled as

part_2, and receives a second ETag. - The client requests S3 to complete the multipart upload, including the

upload_idand the mapping of part numbers to ETags. - S3 reassembles the large object from its parts and returns a success message to the client. The garbage collector reclaims space from the now unused parts.

Going further

S3 systems are complex beasts - this discussion only touches on the bare essentials of how they work. We have explored how object storage compares to other storage types, what the core flows of S3 look like, and how to design a system to support them. We have also considered expansions to our system, such as data corruption verification, garbage collection, object versioning, and multipart uploads, and how to support them without compromising the core design.

To delve deeper into the inner workings of S3, consider:

- how to support automatic object expiration ("time to live"),

- how to list out all objects in a bucket or with a given prefix,

- how to efficiently paginate object listings from sharded databases,

- how erasure coding can contribute to data availability and redundancy.

S3 is the bedrock of cloud object storage. Learning about how it works, at both higher and lower levels, is a great way to become familiar with the core concepts behind highly available storage systems at scale.